The AI Developer Productivity Paradox in 2025

Why AI feels faster but delivery slows, and how to fix it.

Features and findings current as of October 2025. Google Cloud, DORA and Atlassian are trademarks of their respective owners.

The primary drag on AI developer productivity isn’t the models; it’s the lack of system-level context and governance. Teams that adopt paved-road workflows with guardrails and an expert in the loop convert local speed into stable, end-to-end throughput.

Evidence at a glance (2024–2025):

• RCT (experienced OSS devs): AI allowed → +19% task time vs. control; effort felt lower.

• DORA: Successful AI adoption is a systems problem; value-stream practices needed to avoid downstream chaos.

• Atlassian DevEx 2025: 68% save 10+ hours/week with AI, yet losses to org friction cancel gains.

• AI Index 2025: Broadly positive productivity signal across the workforce, with narrowing skill gaps.

The Evidence

A randomized controlled trial from mid-2025. In "Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity," Becker, Rush, and colleagues studied seasoned contributors doing real tasks in mature repos they know well. The developers forecast a ~24% speed-up, but actual task time increased by 19% when AI was allowed. They still preferred working with AI because the work felt easier: more editing than creation. The authors note that effects may differ for junior developers or unfamiliar codebases.

Reuters summarized the same finding: experienced developers on familiar codebases slowed down with AI, largely due to review and correction overhead, even though expectations were the opposite.

Zooming out, the macro signal is positive. The Stanford AI Index 2025 synthesizes dozens of studies and concludes AI generally boosts productivity and often narrows skill gaps, while urging better measurement and governance to unlock durable gains.

DORA’s 2024–2025 view: make it a systems project. DORA emphasizes that adopting AI isn’t about sprinkling tools into editors; it’s about capabilities across value streams. Their 2025 AI report explicitly frames AI adoption as a systems problem, recommending paved-road practices and governance to keep local accelerations from degrading downstream reliability.

Developer experience still hits friction. Atlassian’s State of DevEx 2025 surveyed 3,500 practitioners: 68% report saving 10+ hours/week with AI, yet teams simultaneously lose significant time to non-coding inefficiencies like knowledge findability, coordination, and tool switching — effectively canceling the gains.

Why Gains Stall

- Missing systems context. AI suggestions are often “directionally right” but miss implicit domain rules, service contracts, or infra invariants. Experts then spend cycles validating and reworking drafts, eroding headline speed.

- Under-governed pipelines. Without policy-as-code (SAST/DAST, secrets, SBOM, approvals), local accelerations leak as stability debt that shows up later as incidents or hotfix churn.

- Friction and findability. Documentation lag, scattered repos, and poor discoverability wipe out perceived time savings — a consistent 2025 DevEx theme.

Key definitions (quick refresher)

- Systems context: The web of domain rules, service contracts, infra constraints, and compliance norms that define “correct” in your stack.

- Paved road: An endorsed, opinionated workflow with golden templates, automation, and guardrails that reduce decision load and variance.

- Guardrails: Enforced checks (SAST/DAST, secrets scanning, SBOMs, approvals) and change policies that protect reliability.
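
To make the guardrails definition concrete, here is a minimal sketch of a policy gate in Python. It is an illustration under stated assumptions, not a reference implementation: the ChangeReport fields, the REQUIRED_APPROVALS threshold, and the evaluate_gate function are hypothetical names, and a real pipeline would fill them from its own scanners and review tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ChangeReport:
    """Hypothetical summary of the checks run against one change."""
    sast_high_findings: int   # high-severity static-analysis findings
    secrets_found: int        # hits from secrets scanning
    sbom_attached: bool       # whether an SBOM was produced for the build
    approvals: int            # number of human approvals on the change


# Assumed threshold for illustration; set this from your own change policy.
REQUIRED_APPROVALS = 1


def evaluate_gate(report: ChangeReport) -> List[str]:
    """Return the list of policy violations; an empty list means the gate passes."""
    violations = []
    if report.sast_high_findings > 0:
        violations.append("high-severity SAST findings present")
    if report.secrets_found > 0:
        violations.append("secrets detected in the change")
    if not report.sbom_attached:
        violations.append("SBOM missing")
    if report.approvals < REQUIRED_APPROVALS:
        violations.append("not enough approvals")
    return violations


# Example: an AI-assisted change that passed scanning but has no reviewer yet.
report = ChangeReport(sast_high_findings=0, secrets_found=0,
                      sbom_attached=True, approvals=0)
for violation in evaluate_gate(report):
    print("BLOCKED:", violation)
```

Returning every violation at once, rather than failing on the first, lets the author fix a change in one pass instead of rerunning the pipeline for each check.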

Where AI Helps (Today)

- Scaffolding & first drafts for modules, tests, scripts, and migrations, shrinking ramp-up time and cognitive load.

- Review assistance that summarizes diffs, flags potential risks, and proposes patches for human curation, which speeds up local loops when governed.

- Documentation generation (READMEs, ADRs, runbooks) that reduces onboarding drag and eases cross-team handoffs.

- Cross-stack translation and API usage examples that unblock integration tasks and standardize patterns.

Where It Hurts (Without Guardrails)

- Intent mismatch on mature systems leads to long edit-and-verify loops.

- Hidden stability costs surface as change-failure and hotfix churn downstream.

- Prompt thrash & context switching add overhead that doesn’t show in simple output metrics.

Measure What Matters

Move beyond “PRs merged” and time-to-type. Track end-to-end, flow-oriented outcomes:

- Flow efficiency, lead time for changes, deployment frequency

- Change failure rate (CFR), MTTR, rework ratio

- Code churn, rollback rate, and time to usable docs after a merge
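
As a rough sketch of how a few of these metrics could be computed from deployment records, consider the Python below. The Deployment record and its field names are assumptions for illustration, and MTTR is simplified here to the time from a failed deployment to restoration; your telemetry may define incident start differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical record of one change reaching production."""
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # change reached production
    failed: bool = False                    # caused an incident, rollback, or hotfix
    restored_at: Optional[datetime] = None  # service restored, if it failed


def lead_time_hours(deploys: List[Deployment]) -> float:
    """Median hours from commit to production."""
    return median((d.deployed_at - d.committed_at).total_seconds() / 3600
                  for d in deploys)


def change_failure_rate(deploys: List[Deployment]) -> float:
    """Share of deployments that failed in production."""
    return sum(d.failed for d in deploys) / len(deploys)


def mttr_hours(deploys: List[Deployment]) -> Optional[float]:
    """Mean hours from a failed deployment to restoration; None if no failures."""
    durations = [(d.restored_at - d.deployed_at).total_seconds() / 3600
                 for d in deploys if d.failed and d.restored_at]
    return sum(durations) / len(durations) if durations else None


def deployment_frequency(deploys: List[Deployment], window_days: int) -> float:
    """Average deployments per day over the observation window."""
    return len(deploys) / window_days


# Made-up sample data, for illustration only.
t0 = datetime(2025, 10, 1, 9, 0)
sample = [
    Deployment(t0, t0 + timedelta(hours=24)),
    Deployment(t0, t0 + timedelta(hours=48), failed=True,
               restored_at=t0 + timedelta(hours=50)),
    Deployment(t0, t0 + timedelta(hours=12)),
    Deployment(t0, t0 + timedelta(hours=36)),
]
print("lead time (h):", lead_time_hours(sample))
print("CFR:", change_failure_rate(sample))
print("MTTR (h):", mttr_hours(sample))
print("deploys/day:", deployment_frequency(sample, window_days=7))
```

The median is used for lead time so that one unusually slow change does not dominate the figure; a mean or a percentile may suit your reporting better.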

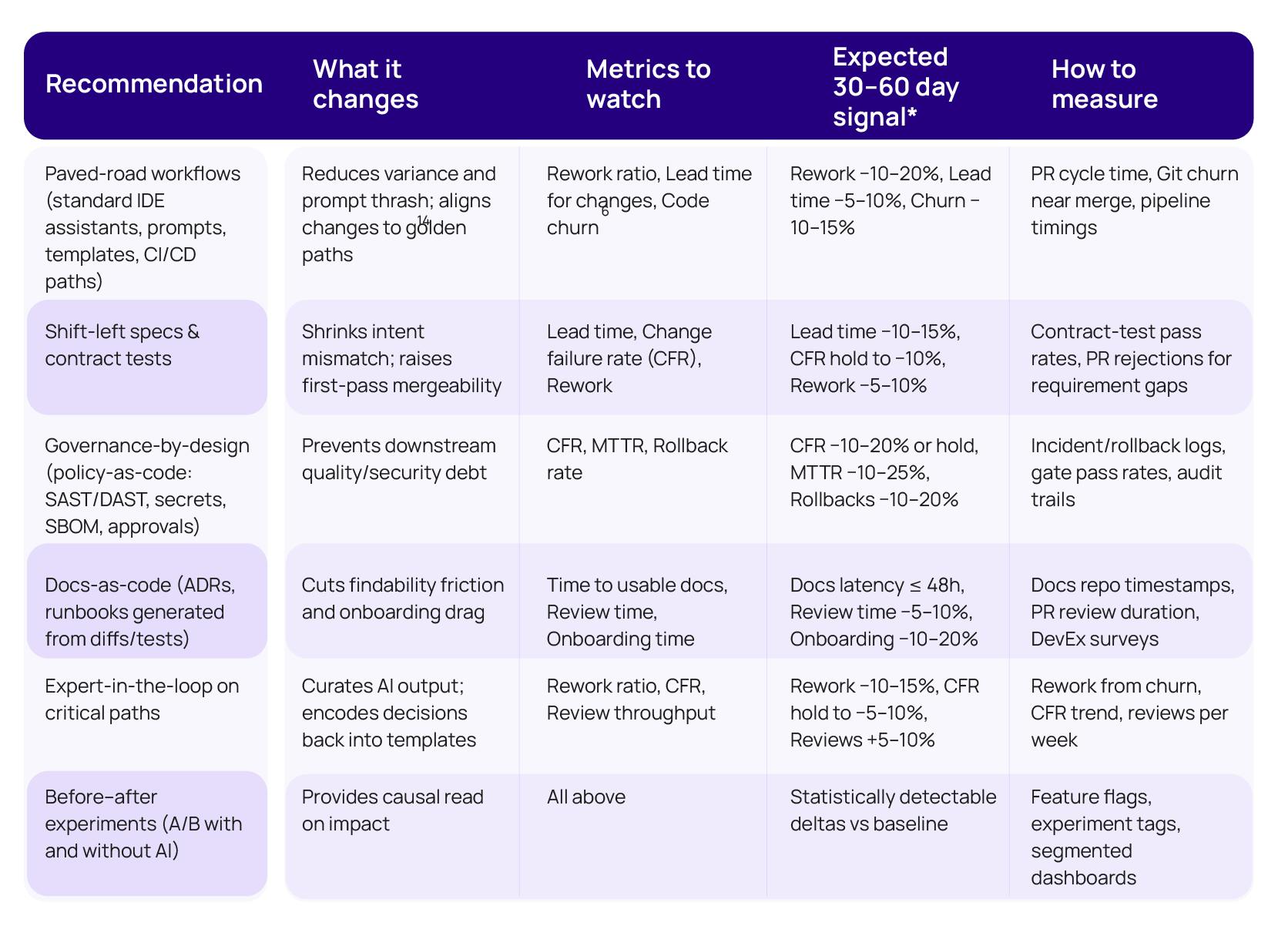

Practice-to-Metrics Impact Map (30–60 day pilot)

How recommended practices translate into measurable outcomes. Targets are directional; baseline first and validate in your environment.

*Directional targets for pilots on 1–2 services with paved roads enabled.

A 2025 Playbook That Works

1) Put AI inside paved-road workflows. Standardize assistants, org-wide prompts, context windows, and golden templates wired into CI/CD. Why it works: reduces variance and prompt thrash; aligns to conventions. Expected impact: −10–20% rework, −5–10% lead time in 30–60 days (pilot scope). (Google Cloud)

2) Shift left on specification. Use crisp acceptance criteria, contract tests, and lightweight ADRs so AI drafts match domain rules on first pass. Expected impact: −10–15% lead time and stable CFR while increasing throughput.

3) Governance-by-design. Enforce policy-as-code (SAST/DAST, secrets scanning, SBOM provenance, approvals) by default so local speed doesn’t erode stability. Expected impact: CFR steady or improving, MTTR down when incidents occur.

4) Docs as code. Autogenerate runbooks and ADRs from diffs and test runs so knowledge ships with code and cuts findability friction. Expected impact: faster onboarding and smoother handoffs (aligns with DevEx data).

5) Expert-in-the-loop. Pair senior reviewers with AI on critical paths; encode decisions back into templates and prompts. Expected impact: rework down, stability preserved as adoption scales.

6) Measure with before–after experiments. Run A/B periods with and without AI on a small scope (1–2 services) to isolate real impact across the KPI stack. Expected impact: org-specific deltas grounded in your telemetry.
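
The before–after comparison in step 6 can be sketched as a small Python helper. The metric names and all numbers below are made up for illustration and are not results from any study; percent_change and compare_periods are hypothetical helpers, not part of any tool named in this article.

```python
from typing import Dict


def percent_change(before: float, after: float) -> float:
    """Relative change from the baseline period to the pilot period, in percent."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return (after - before) / before * 100


def compare_periods(baseline: Dict[str, float],
                    pilot: Dict[str, float]) -> Dict[str, float]:
    """Percent change for every metric present in both periods, to one decimal."""
    return {name: round(percent_change(baseline[name], pilot[name]), 1)
            for name in baseline if name in pilot}


# Illustrative numbers only: a baseline period without AI, then a pilot period.
baseline = {"lead_time_hours": 40.0, "rework_ratio": 0.20, "cfr": 0.10}
pilot = {"lead_time_hours": 36.0, "rework_ratio": 0.17, "cfr": 0.10}

for metric, delta in compare_periods(baseline, pilot).items():
    print(f"{metric}: {delta:+.1f}%")
```

A simple before–after delta does not control for other changes between the two periods (team composition, release timing, incident load), so treat the output as a directional signal and keep the scope and the measurement windows comparable.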

How Devsu + VelX help: VelX brings paved-road templates, policy gates, audit trails, and expert-in-the-loop as pre-built playbooks you can drop into your pipelines, so AI-assisted changes inherit best practices by default. (Ask us for our KPI checklist and Guardrails Blueprint.)

Looking Ahead (2026–2027)

- Agentic workflows mature. Multistep agents that call internal tools and encode your invariants should reduce intent mismatch and narrow the RCT-style slowdowns.

- Stability rebounds as practices catch up. DORA’s guidance points to value-stream and governance capabilities that preserve delivery stability while review speed and lead-time gains persist.

- DevEx becomes the multiplier. Fix docs and discovery so saved hours actually move product forward, not into the friction sink.

TL;DR (for quick quoting)

- The paradox in 2025: AI can lower effort while increasing elapsed time on expert tasks in familiar codebases.

- Treat AI adoption as a systems initiative (value streams, paved roads, governance), not a tools rollout.

- The macro signal remains positive; AI tends to boost productivity and narrow skill gaps when governed well.

- DevEx friction cancels many wins; address knowledge findability and coordination.

- Start with a 30–60 day pilot on 1–2 services; target −10–20% rework and −5–15% lead time while holding CFR.
